05-24-2022, 11:41 AM

Lots of folks ask how to download the wiki for offline use, and over the years we've relied on tools such as HTTrack to copy the website and save a local copy. The problem with this is that the archive we get is often huge as BLEEP! The last wiki I downloaded and shared was an archive of over 1.1GB in size!

So, I wanted a much simpler alternative, and thus I came up with this little solution (the full code is further down in this post):

With all of 130 lines of code, we fetch and save the whole wiki to disk!

NOTE: If you run this, it will save over 600 files into the same directory the program is run from! I'd suggest saving it into its own directory and running it from there! Complaining over a messy QB64 folder after this warning will only get me to laugh at you.
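One way to keep things tidy is to have the program make its own folder and switch into it before it downloads anything. This is only a minimal sketch: the folder name "QB64Wiki" is my own pick for illustration, and these lines are not part of the downloader as posted. They would go near the top of the program, before the first download.

```basic
'Sketch: create a dedicated folder and work from inside it.
'"QB64Wiki" is an assumed folder name; change it to whatever you like.
Const WikiFolder$ = "QB64Wiki"

'_DirExists returns 0 when the folder isn't there yet
If _DirExists(WikiFolder$) = 0 Then MkDir WikiFolder$
ChDir WikiFolder$

Print "Files will now be saved in: "; _CWD$
```

Since curl writes to the current working directory when given a bare file name, everything downloaded after the ChDir should land inside that folder.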

Now, this creates a whole bunch of files with a *.HTML extension to them. You can click on any of these files and open them in your web browser, but you should know in advance that the links between them aren't going to work as they expect a specific file structure on the wiki and we're not providing that here. You'll have to click and open each file individually yourself.
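If opening 600 files one at a time sounds tedious, one possible workaround is to write a simple index page that links to every saved file. The sketch below is not part of the downloader: the WriteIndex sub, the index.html name, and the three sample file names are all made up for illustration. In a real run you'd hand it the cleaned file names that the main loop builds.

```basic
'Sketch: build a simple index.html that links to each saved page.
'WriteIndex and the sample names below are hypothetical, for illustration only.
Dim Files(1 To 3) As String
Files(1) = "ABS.HTML"
Files(2) = "CLS.HTML"
Files(3) = "PRINT.HTML"

WriteIndex "index.html", Files()
Print "Wrote index.html"

Sub WriteIndex (outFile$, names() As String)
    f = FreeFile
    Open outFile$ For Output As #f
    Print #f, "<html><body><ul>"
    For i = LBound(names) To UBound(names)
        'Every saved page sits in the same folder, so a bare file name works as the link
        Print #f, "<li><a href=" + Chr$(34) + names(i) + Chr$(34) + ">" + names(i) + "</a></li>"
    Next
    Print #f, "</ul></body></html>"
    Close #f
End Sub
```

This doesn't repair the links inside the pages themselves; it only gives you one page to click from. File names containing characters such as # would likely need escaping before they work as links.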

Working with these files takes a little more effort, but there are also a few big advantages:

1) Anytime you run the program, you'll know you have the most up-to-date version of the information from the wiki.

2) You don't have to wait for someone to run HTTrack, grab a copy from the web, and share it with you.

3) The total size of this on disk is about 20MB -- not 1.2GB!! It's a helluva lot more portable!

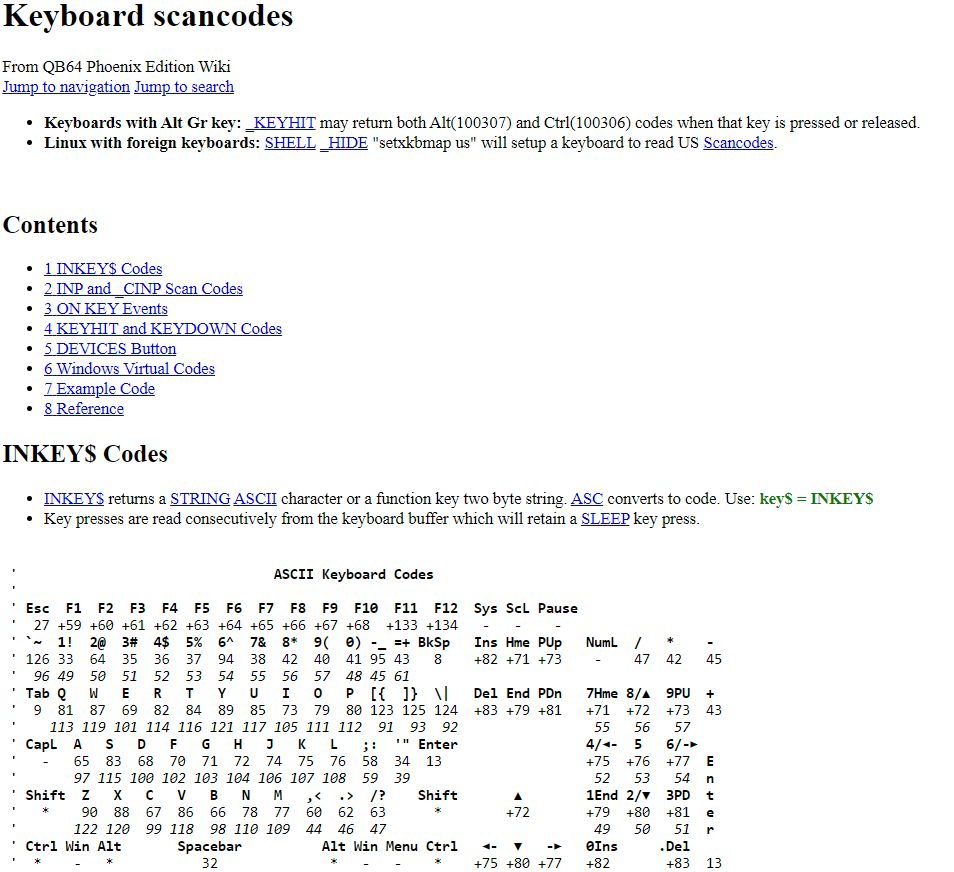

Pages aren't quite as pretty as what you'll find when you go to the wiki itself, as it doesn't have the templates to pull upon to format everything properly, but they're more than readable in my opinion. Below is the way the scancode page looks for me, in microsoft edge, as an example page.

If there's anyone who just wants the files themselves, without having to download the pages on their own (as they take about 10 minutes or so on my machine to download all of them), I've zipped them all up in a 7z archive, which is available via the attachment below.


Code:

$Console:Only

DefLng A-Z

Const HomePage$ = "https://qb64phoenix.com"

ReDim Shared PageNames(10000) As String

NumberOfPageLists = DownloadPageLists 'As of mid 2022, there are only 2 pages listing all the page names.

'NumberOfPageLists = 2 'hard coded for counting without having to download pages repeatedly while testing code

PageCount = CountPages(NumberOfPageLists)

Print PageCount

t# = Timer: t$ = Time$

For i = 1 To PageCount

Cls

Print "Downloading... (Started at: "; t$; ")"

FileName$ = Mid$(PageNames(i), _InStrRev(PageNames(i), "/") + 1) + ".HTML"

FileName$ = CleanHTML(FileName$)

Print i; "of"; PageCount, FileName$

Download PageNames(i), FileName$

_Display

Next

_AutoDisplay

Print "FINISHED!! (Finished at: "; Time$; ")"

Print

Print Using "##,###.## seconds to download everything on this PC."; Timer - t#

Function CountPages (NumberOfPageLists)

FileLeft$ = "Page List("

FileRight$ = ").txt"

For i = 1 To NumberOfPageLists

file$ = FileLeft$ + _Trim$(Str$(i)) + FileRight$

Open file$ For Binary As #1

l = LOF(1): t$ = Space$(l)

Get #1, 1, t$

Close #1

start = InStr(t$, "<ul") 'skip down to the part of the page with the page listings

finish = InStr(start, t$, "</ul") 'and we can quit parsing when we get down to this point

p = start 'current position in file we're parsing

Do Until p > finish

p = InStr(p, t$, "<li><a href=") + 13

If p = 13 Then Exit Do 'we've parsed all the lists from the page. No need to keep going

p2 = InStr(p, t$, Chr$(34))

count = count + 1

PageNames(count) = Mid$(t$, p, p2 - p)

Loop

Next

CountPages = count

ReDim _Preserve PageNames(count) As String

End Function

Function DownloadPageLists

FileLeft$ = "Page List("

FileRight$ = ").txt"

FileCount = 1

CurrentFile$ = ""

url$ = "/qb64wiki/index.php/Special:AllPages" 'the first file that we download

Do

file$ = FileLeft$ + _Trim$(Str$(FileCount)) + FileRight$

Download url$, file$

url2$ = GetNextPage$(file$)

P = InStr(url2$, "from=")

If P = 0 Then Exit Do

If Mid$(url2$, P + 5) > CurrentFile$ Then

CurrentFile$ = Mid$(url2$, P + 5)

FileCount = FileCount + 1

url$ = url2$

Else

Exit Do

End If

Loop

DownloadPageLists = FileCount

End Function

Function CleanHTML$ (OriginalText$)

text$ = OriginalText$ 'don't corrupt incoming text

Type ReplaceList

original As String

replacement As String

End Type

'Expandable HTML replacement system

Dim HTML(200) As ReplaceList

HTML(0).original = "&amp;": HTML(0).replacement = "&" 'decode the HTML entity; replacing "&" with itself would loop forever

' HTML(1).original = "%24": HTML(1).replacement = "$"

For i = 1 To 200

HTML(i).original = "%" + Hex$(i + 16)

HTML(i).replacement = Chr$(i + 16)

'Print HTML(i).original, HTML(i).replacement

'Sleep

Next

For i = 0 To UBound(HTML)

Do

P = InStr(text$, HTML(i).original)

If P = 0 Then Exit Do

text$ = Left$(text$, P - 1) + HTML(i).replacement + Mid$(text$, P + Len(HTML(i).original))

Loop

Next

CleanHTML$ = text$

End Function

Sub Download (url$, outputFile$)

url2$ = CleanHTML(url$)

'Print "https://qb64phoenix.com/qb64wiki/index.php?title=Special:AllPages&from=KEY+n"

'Print HomePage$ + url2$

Shell _Hide "curl -o " + Chr$(34) + outputFile$ + Chr$(34) + " " + Chr$(34) + HomePage$ + url2$ + Chr$(34)

End Sub

Function GetNextPage$ (currentPage$)

SpecialPageDivClass$ = "<div class=" + Chr$(34) + "mw-allpages-nav" + Chr$(34) + ">"

SpecialPageLink$ = "<a href="

SpecialPageEndLink$ = Chr$(34) + " title"

Open currentPage$ For Binary As #1

l = LOF(1)

t$ = Space$(l)

Get #1, 1, t$

Close

sp = InStr(t$, SpecialPageDivClass$)

If sp Then

lp = InStr(sp, t$, SpecialPageLink$)

If lp Then

lp = lp + 9

lp2 = InStr(lp, t$, SpecialPageEndLink$)

link$ = Mid$(t$, lp, lp2 - lp)

GetNextPage$ = CleanHTML(link$)

End If

End If

End Function
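For anyone who wants to tinker, the lookup table in CleanHTML could also be swapped for a decoder that reads the two hex digits after each % directly. This is an alternative sketch, not the code used in the program: DecodePercent$ is a name I made up, it only handles %XX escapes (it leaves HTML entities alone), and it makes a single pass instead of repeated replacements.

```basic
'Sketch: decode %XX escapes in one pass instead of using a replacement table.
'DecodePercent$ is a hypothetical helper, not part of the posted program.
Print DecodePercent$("CHR%24") 'prints CHR$
Print DecodePercent$("Keyboard%20scancodes") 'prints Keyboard scancodes

Function DecodePercent$ (text$)
    HexDigits$ = "0123456789ABCDEFabcdef"
    result$ = ""
    i = 1
    Do While i <= Len(text$)
        c$ = Mid$(text$, i, 1)
        If c$ = "%" And i + 2 <= Len(text$) Then
            h$ = Mid$(text$, i + 1, 2)
            'Only treat it as an escape if both characters are hex digits
            If InStr(HexDigits$, Left$(h$, 1)) > 0 And InStr(HexDigits$, Right$(h$, 1)) > 0 Then
                c$ = Chr$(Val("&H" + h$))
                i = i + 2 'skip past the two hex digits we just consumed
            End If
        End If
        result$ = result$ + c$
        i = i + 1
    Loop
    DecodePercent$ = result$
End Function
```

One difference worth knowing: because this version never rescans its own output, a string like "%2524" comes out as "%24" rather than being decoded a second time.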
